Have you ever wondered what makes today's most impressive AI systems, like ChatGPT, tick? They seem to understand and generate human-like text with remarkable ease. At the heart of that capability lies a foundational architecture known as the Transformer. Since researchers at Google introduced it in 2017, the Transformer has become a symbol of a new era in artificial intelligence.

This single innovation sparked a remarkable wave of progress, and language models built on its design soon appeared everywhere. Models such as BERT and T5 quickly gained attention, and later the globally popular large models like ChatGPT and LLaMA showed just how much the Transformer could do. The architecture opened up problems that once felt out of reach.

So when we talk about the "transformer symbol," we don't mean a picture or a logo. We mean what this architecture represents: a pivotal moment in AI development, a testament to clever design, and the engine behind many of the smart tools we use today. It is, in effect, the blueprint for a new kind of machine intelligence. This article will help you get a better grip on what that symbol means for the world of AI.

Table of Contents

- The Birth of a New Era in AI

- How the Transformer Works: A Glimpse Inside

- The Transformer as a Universal Tool

- Evolution and the Path Ahead

- The Transformer Symbol in Practice

- Frequently Asked Questions

The Birth of a New Era in AI

The story of the Transformer began with a 2017 paper from Google called "Attention Is All You Need." The paper put Attention mechanisms front and center, making Attention the only mechanism the model uses to relate words to one another. The model it introduced, the Transformer, kept the familiar encoder-decoder layout but dropped the recurrent (RNN) and convolutional (CNN) layers that earlier models relied on, building both halves from Attention plus simple feed-forward layers instead. That was a big deal at the time.

Before the Transformer, models often struggled to process long sequences efficiently, a common challenge in areas like machine translation. RNNs, for instance, process words one after another, which is slow to train and tends to lose information over long sentences. The Transformer took a different approach: it lets the model look at all parts of a sentence at once, which was a game-changer for both speed and context. This shift laid the groundwork for the large language models we see today.

The large-model era itself, with models like GPT and BERT, started taking shape around 2018. That year saw two very important deep learning models: OpenAI's GPT, built around generative pre-training, and Google's BERT, built on the Transformer's bidirectional encoder. These early models signaled what was coming and set the stage for even bigger things in AI.

How the Transformer Works: A Glimpse Inside

To appreciate what the Transformer represents, it helps to have a general idea of how it works. People sometimes call it a "universal translator" for neural networks, a nod to its origins: the Transformer, now the architectural backbone of modern AI models like ChatGPT, was originally designed for machine translation. Its key ingredient is the Self-Attention mechanism, which lets the model work out how every word in a sentence relates to every other word, all at the same time.

Encoder and Decoder at Its Core

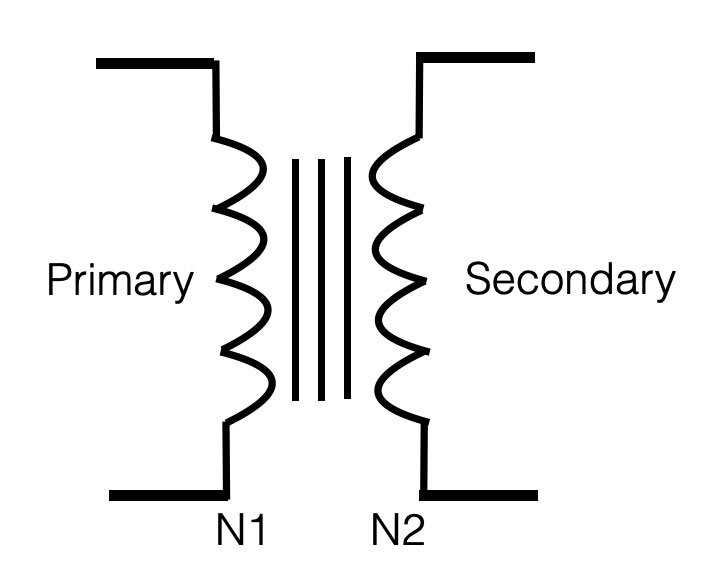

As the diagram shows, the Transformer is divided into two main parts: an Encoder on the left and a Decoder on the right. In the original paper, each is a stack of six identical layers, or "blocks." This modular design lets the Transformer process the input and then generate the output in a very organized way. The Encoder takes the input sequence, say a sentence in one language, and turns it into a rich representation. The Decoder then uses that representation to produce the output sequence, perhaps the translated sentence.

In practice the flow goes like this: input data enters the Encoder and passes through its layers. The result is handed to the Decoder, which combines it with its own previous outputs to generate the final sequence one token at a time. Because the Encoder handles every input position in parallel (and, during training, so does the Decoder), the Transformer trains much faster than older sequential methods.
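
A minimal Python sketch can make this flow concrete. Here `encode` and `decode_step` are hypothetical stand-ins for the two six-block stacks, and `<s>`/`</s>` are assumed start and end markers; the toy versions used below simply copy words, so only the control flow is meaningful.

```python
from typing import Callable, List, Sequence

BOS, EOS = "<s>", "</s>"  # hypothetical start- and end-of-sequence markers


def greedy_generate(
    source: Sequence[str],
    encode: Callable[[Sequence[str]], list],
    decode_step: Callable[[list, List[str]], str],
    max_len: int = 50,
) -> List[str]:
    """Run the encoder once, then let the decoder emit one token at a time."""
    # The encoder sees the whole source sequence at once and returns its representation ("memory").
    memory = encode(source)
    generated = [BOS]
    for _ in range(max_len):
        # The decoder conditions on the encoder memory and on everything produced so far.
        token = decode_step(memory, generated)
        if token == EOS:
            break
        generated.append(token)
    return generated[1:]  # drop the start marker


# Toy stand-ins (hypothetical): "encode" upper-cases each word, "decode" copies the next one.
def toy_encode(source):
    return [word.upper() for word in source]


def toy_decode_step(memory, generated):
    position = len(generated) - 1  # number of real tokens produced so far
    return memory[position] if position < len(memory) else EOS


print(greedy_generate(["the", "cat", "sat"], toy_encode, toy_decode_step))  # ['THE', 'CAT', 'SAT']
```

Notice the asymmetry: the encoder runs once over the whole input, while generation proceeds one token at a time.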

The Magic of Self-Attention

The real secret sauce of the Transformer is its Self-Attention mechanism. Older models read words one by one; Self-Attention instead lets the Transformer weigh the importance of every other word in the input when processing a single word. Take the sentence "The animal didn't cross the street because it was too tired." Self-Attention helps the model figure out that "it" refers to "the animal." This ability to take in context across an entire sentence at once is what gives the Transformer its remarkable capabilities.
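
Here is a minimal, single-head sketch of scaled dot-product self-attention in plain Python, assuming tiny hand-picked projection matrices (`w_q`, `w_k`, `w_v`) in place of learned ones. Real implementations use several heads, learned weights, and fast matrix libraries; the point here is only that each row of `weights` spreads one token's attention over the whole sentence.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain-Python matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix):
    """Single-head scaled dot-product self-attention.

    x holds one row per token. Returns (outputs, weights), where weights[i][j]
    is how strongly token i attends to token j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(w_k[0]))  # sqrt(d_k)
    weights = [
        # Compare query i with every key at once, then normalize into a distribution.
        softmax([sum(qa * kb for qa, kb in zip(q_i, k_j)) / scale for k_j in k])
        for q_i in q
    ]
    # Each output row is a weighted average of all value rows.
    return matmul(weights, v), weights


# Three made-up 2-D token vectors and identity projections, purely for illustration.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(tokens, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```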

Self-Attention also copes naturally with inputs of different lengths: the same computation applies whether a sentence has five words or five hundred, and any word can draw on any other word in a single step. An RNN, by contrast, has to carry information forward one word at a time, so connections between distant words are easily lost. Self-Attention gives the Transformer a way to "see" the whole picture at once, rather than piecing it together word by word. It's a bit like having a bird's-eye view of a sentence, which is very helpful for understanding meaning.
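
The sketch below shows one common way batches of different-length sequences are handled, assuming sequences are padded to a shared length and a boolean mask marks the real tokens. Masked positions receive a score of minus infinity, so they get zero weight and the result matches attention over the unpadded sequence. The 1-D scalar "tokens" and the helper names are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple


def masked_softmax(scores: Sequence[float], mask: Sequence[bool]) -> List[float]:
    """Softmax that gives exactly zero weight to positions where mask is False."""
    masked = [s if keep else float("-inf") for s, keep in zip(scores, mask)]
    m = max(masked)  # assumes at least one real (unmasked) position
    exps = [math.exp(s - m) for s in masked]  # exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


def pad_batch(seqs: Sequence[Sequence[float]], pad: float = 0.0) -> Tuple[List[List[float]], List[List[bool]]]:
    """Pad sequences to a common length and return (padded, masks)."""
    longest = max(len(s) for s in seqs)
    padded = [list(s) + [pad] * (longest - len(s)) for s in seqs]
    masks = [[True] * len(s) + [False] * (longest - len(s)) for s in seqs]
    return padded, masks


# Two "sentences" of scalar tokens with different lengths (made-up values).
batch = [[0.5, 1.0, -0.3], [2.0, 1.0]]
padded, masks = pad_batch(batch)
for row, mask in zip(padded, masks):
    scores = [row[0] * key for key in row]  # toy 1-D dot products, query = first token
    print([round(w, 3) for w in masked_softmax(scores, mask)])
```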

The Transformer as a Universal Tool

While the Transformer first found its footing in machine translation and other NLP tasks, its usefulness turned out to be much broader. Its design is very general, so it can be adapted to many different purposes. Beyond language, with some modifications, it can even be used in computer vision. These characteristics have made the Transformer a truly versatile tool in the AI world.

Beyond Language: Vision and More

One of the most exciting developments is the Vision Transformer (ViT), a version of the Transformer adapted to images: it cuts a picture into small patches and treats each patch the way a language model treats a word. Later vision models follow the same recipe; the Swin Transformer, for example, begins with a "Patch Partition" step that splits the input image into small, non-overlapping pieces, much as the original ViT does. These pieces then go through a linear mapping before entering the first stage of Transformer blocks. This shows how adaptable the core idea of the Transformer really is.
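
Below is a plain-Python sketch of patch partition followed by a linear mapping, assuming an image stored as nested lists (height x width x channels). The `weight` and `bias` values would be learned in a real model; the tiny 4x4 example is made up (the ViT paper itself uses 16x16 patches).

```python
from typing import List

Image = List[List[List[float]]]  # height x width x channels


def patch_partition(image: Image, patch: int) -> List[List[float]]:
    """Cut an image into non-overlapping patch x patch tiles, each flattened to one vector."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):        # walk patch rows top to bottom
        for left in range(0, width, patch):    # and patch columns left to right
            flat = [
                value
                for row in image[top:top + patch]
                for pixel in row[left:left + patch]
                for value in pixel
            ]
            patches.append(flat)
    return patches


def linear_embed(patches: List[List[float]], weight: List[List[float]], bias: List[float]) -> List[List[float]]:
    """Project each flattened patch to an embedding: out = patch @ weight + bias."""
    return [
        [sum(p * weight[i][j] for i, p in enumerate(vec)) + bias[j] for j in range(len(bias))]
        for vec in patches
    ]


image = [[[float(4 * r + c)] for c in range(4)] for r in range(4)]  # 4x4 image, one channel
patches = patch_partition(image, patch=2)
print(patches)  # [[0.0, 1.0, 4.0, 5.0], [2.0, 3.0, 6.0, 7.0], [8.0, 9.0, 12.0, 13.0], [10.0, 11.0, 14.0, 15.0]]
```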

The differences between the Transformer and convolutional neural networks (CNNs) are significant: they handle data differently, extract features differently, and tend to be used for different things. A CNN slides small local filters over its input, so each layer only sees a small neighborhood and a wide view has to be built up by stacking many layers. Self-Attention, by contrast, lets every position draw on every other position within a single layer. Each approach has its strengths, but the Transformer's ability to capture global relationships in data is a distinct advantage for many tasks.
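
To see the difference in reach, here is a toy experiment, assuming a made-up 3-tap filter and a parameter-free attention in which each scalar token acts as its own query, key, and value. Changing only the first input leaves the convolution's last output untouched, but it does change the attention's last output.

```python
import math
from typing import List, Sequence


def conv1d_same(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """1-D convolution (cross-correlation, as in deep-learning libraries) with zero padding.

    Output i only depends on x[i - r .. i + r], where r = len(kernel) // 2.
    """
    r = len(kernel) // 2
    padded = [0.0] * r + list(x) + [0.0] * r
    return [sum(k * padded[i + t] for t, k in enumerate(kernel)) for i in range(len(x))]


def toy_attention(x: Sequence[float]) -> List[float]:
    """Parameter-free self-attention on scalars: every output mixes every input."""
    out = []
    for q in x:
        scores = [q * k for k in x]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        out.append(sum(w * v for w, v in zip(weights, x)) / sum(weights))
    return out


x = [0.1, 0.4, -0.2, 0.3, 0.5, -0.1, 0.2, 0.3]
x_changed = [5.0] + x[1:]  # perturb only the first position
kernel = [0.25, 0.5, 0.25]
print(conv1d_same(x, kernel)[-1] == conv1d_same(x_changed, kernel)[-1])  # True: too far away to matter
print(toy_attention(x)[-1] == toy_attention(x_changed)[-1])              # False: attention sees it
```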

Tackling Different Problems, Like Regression

The Transformer's flexibility even extends to regression problems. Regression is a type of supervised learning where the goal is to predict a continuous value, such as a house price or a stock value. To adapt the architecture, one typically replaces the output layer with one that produces a single number and trains with a loss such as mean squared error. It's impressive how readily the same architecture can be adjusted for so many different kinds of prediction.
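
As a minimal sketch of one common recipe (an assumption, not the only option), the per-token vectors from a Transformer encoder can be averaged into one vector and passed through a single linear unit. The encoder outputs, weights, and bias below are made-up numbers.

```python
from typing import List, Sequence


def mean_pool(hidden: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-token vectors produced by an encoder into one vector."""
    n = len(hidden)
    return [sum(column) / n for column in zip(*hidden)]


def regression_head(pooled: Sequence[float], weight: Sequence[float], bias: float) -> float:
    """A single linear unit mapping the pooled vector to one continuous prediction."""
    return sum(p * w for p, w in zip(pooled, weight)) + bias


def mse(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error, a common training loss for regression."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


hidden = [[1.0, 2.0], [3.0, 4.0]]           # pretend encoder output for a 2-token input
pooled = mean_pool(hidden)                  # [2.0, 3.0]
pred = regression_head(pooled, [0.5, 1.0], bias=1.0)
print(pred)                                 # 5.0
print(mse([pred], [3.0]))                   # 4.0
```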

Evolution and the Path Ahead

Large Transformer models typically improve simply by growing in size and training on more pre-training data. There are, of course, exceptions to the bigger-is-better trend: DistilBERT, for example, deliberately aims to be a smaller, faster version of BERT. Different models also use different parts of the Transformer: GPT-2 is built only from "transformer decoder" blocks, while BERT uses only the "bidirectional encoder." Each of these designs has its own strengths.
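
The practical difference between a decoder-only model like GPT-2 and an encoder like BERT shows up in the attention mask. In this small sketch (helper names are illustrative), `x` marks a position a token may attend to: GPT-style models see only earlier tokens, while BERT-style encoders see the whole sentence in both directions.

```python
from typing import List


def attention_mask(n: int, causal: bool) -> List[List[bool]]:
    """mask[i][j] is True when token i is allowed to attend to token j."""
    return [[(j <= i) if causal else True for j in range(n)] for i in range(n)]


def show(mask: List[List[bool]]) -> str:
    # Render allowed positions as 'x' and blocked positions as '.'.
    return "\n".join("".join("x" if ok else "." for ok in row) for row in mask)


print("GPT-style (causal):")
print(show(attention_mask(4, causal=True)))
print("BERT-style (bidirectional):")
print(show(attention_mask(4, causal=False)))
```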

Handling Long Sequences

One area of ongoing development is handling very long sequences. Transformer-XL, for instance, introduced an architecture that can learn dependencies beyond a fixed length without chopping the text into disconnected chunks. It does this by caching the hidden states computed for the previous segment and reusing them as extra context when processing the current one, so the model draws on both the current input and earlier inputs and can remember more context. This is an important step for applications that need to understand long narratives or complex code.
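
The segment-level caching idea can be sketched in a few lines, assuming a single layer and a hypothetical `layer(memory, segment)` function. This is a simplification: Transformer-XL caches hidden states at every layer, does not backpropagate into them, and also relies on relative positional encodings, all of which are omitted here.

```python
from typing import Callable, List, Sequence


def run_with_segment_memory(
    tokens: Sequence[float],
    seg_len: int,
    mem_len: int,
    layer: Callable[[List[float], List[float]], List[float]],
) -> List[float]:
    """Process a long sequence segment by segment, reusing cached context from earlier segments."""
    memory: List[float] = []
    outputs: List[float] = []
    for start in range(0, len(tokens), seg_len):
        segment = list(tokens[start:start + seg_len])
        outputs.extend(layer(memory, segment))
        # Cache the most recent mem_len states for the next segment. In this one-layer
        # sketch the cached "states" are simply the segment's inputs.
        memory = (memory + segment)[-mem_len:] if mem_len > 0 else []
    return outputs


def visible_context(memory, segment):
    # Toy layer (hypothetical): how many tokens each position can see, i.e. the cached
    # memory plus itself and the earlier tokens in its own segment.
    return [len(memory) + i + 1 for i in range(len(segment))]


print(run_with_segment_memory(list(range(10)), seg_len=4, mem_len=4, layer=visible_context))
print(run_with_segment_memory(list(range(10)), seg_len=4, mem_len=0, layer=visible_context))
```

With memory, the visible context keeps growing across the segment boundary; without it, each segment starts from scratch.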

There is also a lot of work on what some researchers call "Transformer upgrade paths," new ways to extend the architecture's capabilities. Examples include ReRoPE, a modification of rotary position embeddings aimed at "infinite extrapolation" to longer inputs, its variant "inverse Leaky ReRoPE," and combinations with other techniques such as HWFA. Reported results suggest that with a bit of additional pre-training, the Transformer's performance on long sequences can still improve quite a bit, which is promising for the future.
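
For a rough feel of ReRoPE, the sketch below shows only the relative-position mapping as these methods are commonly described, which is an assumption on our part: within a window of size `window` the true distance is kept; beyond it, ReRoPE clamps the distance to `window`, while Leaky ReRoPE lets it keep growing with a small slope `1/k`. The rotary embedding itself and the "inverse" variant are not shown.

```python
from typing import Callable, List, Optional


def rerope_position(distance: int, window: int) -> float:
    """ReRoPE-style mapping: relative distances beyond `window` are clamped to `window`."""
    return float(min(distance, window))


def leaky_rerope_position(distance: int, window: int, k: float) -> float:
    """Leaky variant: keep the true distance inside the window, grow with slope 1/k beyond it."""
    if distance < window:
        return float(distance)
    return window + (distance - window) / k


def relative_matrix(n: int, fn: Callable[..., float], **kwargs) -> List[List[Optional[float]]]:
    # Mapped relative distance i - j for a causal n x n attention pattern (future positions -> None).
    return [[fn(i - j, **kwargs) if j <= i else None for j in range(n)] for i in range(n)]


for row in relative_matrix(5, rerope_position, window=2):
    print(row)
for row in relative_matrix(5, leaky_rerope_position, window=2, k=4.0):
    print(row)
```

Either way, the distances the model sees never run far past what it met during training, which is the intuition behind using such mappings for length extrapolation.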

New Competitors and Improvements

The field of AI keeps moving, and new architectures are constantly being explored. Mamba, a selective state-space model, is one recent research focus with some compelling advantages. According to its authors, Mamba achieves five times the inference throughput of Transformer models of a similar size, and a Mamba-3B model matches the results of a Transformer twice its size. That combination of speed and quality has made Mamba a hot topic among researchers, and a reminder that the journey toward better AI is far from over.

The Transformer Symbol in Practice

The Transformer architecture has become a symbol of how far AI has come. It's the engine behind many of the sophisticated language models we interact with daily, from generating creative text to answering complex questions. Its use of Self-Attention to capture context has opened up countless possibilities and redefined what's achievable in natural language processing and even computer vision.

Yet despite its widespread use and influence, truly grasping the deeper workings of the Transformer can be challenging. A common quip holds that of a hundred people who claim to have studied the Transformer, fewer than ten genuinely understand it. Even if you've published a paper using a Transformer-based model or fine-tuned one, some fundamental questions about its mechanics can still be tricky. That speaks to the depth of this powerful architecture.

The Transformer, then, is more than just a piece of code; it represents a significant leap in how machines learn and interact with information. It's a symbol of AI's rapid progress and its potential to continue reshaping our world. To learn more about the original paper that started it all, you might want to check out "Attention Is All You Need" on arXiv.

We hope this exploration has helped you appreciate the profound impact of this architecture.

Frequently Asked Questions

What is the main idea behind the Transformer model?

The main idea is to use a mechanism called Self-Attention to process all parts of an input sequence at once, rather than one piece at a time. This lets the model capture relationships between words or data points across the entire sequence, which makes it very effective for tasks like translation and text generation.

How did the Transformer change AI compared to older models like RNNs or CNNs?

The Transformer moved away from the step-by-step processing of RNNs and the local focus of CNNs. Self-Attention lets it process a sequence in parallel, which made it much faster to train and better at handling long-range dependencies in data. That was a significant step toward building bigger, more capable models.

What are some well-known AI models that use the Transformer architecture?

Many of today's most famous AI models are built on the Transformer, including Google's BERT, OpenAI's GPT series (such as GPT-2 and the models behind ChatGPT), and Meta's LLaMA. These models have pushed the boundaries of what AI can do in understanding and generating human language.
